Next, rebuild the URL from only its scheme, domain name, and path. This removes URL GET parameters, URL fragments, etc.: `href = parsed_href.scheme + "://" + parsed_href.netloc + parsed_href.path`. Let's finish up the function. If `is_valid(href)` returns `False`, we skip the link and continue to the next one.

The crawling function crawls the website, which means it gets all the links of the first page and then calls itself recursively to follow all the links extracted previously. However, this can cause problems: the program will get stuck on large websites that have many links. As a result, I've added a `max_urls` parameter to stop once a certain number of URLs have been checked.

Alright, let's test this. Make sure you only use it on a website you're authorized to crawl. Otherwise, I'm not responsible for any harm you cause. At the end, print the totals: `print("Total Internal links:", len(internal_urls))`, `print("Total External links:", len(external_urls))`, and `print("Total URLs:", len(external_urls) + len(internal_urls))`.
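The recursive crawl with a `max_urls` limit can be sketched as follows. This is a minimal, offline-testable sketch under stated assumptions, not the tutorial's own code. The `get_links` parameter is a hypothetical stand-in for `get_all_website_links()` and must return the internal links of a page. The `visited` list is also hypothetical: it records crawled pages in place of a global counter.

```python
def crawl(url, get_links, max_urls=30, visited=None):
    """
    Recursively follow links starting at `url`, stopping after `max_urls` pages.

    Assumptions (hypothetical names, not the tutorial's API):
      - `get_links(url)` stands in for get_all_website_links() and returns
        the internal links found on `url`.
      - `visited` collects crawled URLs in order, replacing a global counter.
    """
    if visited is None:
        visited = []
    # stop if the page budget is used up or the page was already crawled
    if len(visited) >= max_urls or url in visited:
        return visited
    visited.append(url)
    for link in get_links(url):
        if len(visited) >= max_urls:
            break  # budget reached: don't follow any more links
        crawl(link, get_links, max_urls, visited)
    return visited


if __name__ == "__main__":
    # a tiny fake website, so the sketch runs without network access
    site = {
        "https://example.com/": ["https://example.com/a", "https://example.com/b"],
        "https://example.com/a": ["https://example.com/", "https://example.com/c"],
        "https://example.com/b": [],
        "https://example.com/c": ["https://example.com/a"],
    }
    print(crawl("https://example.com/", lambda u: site.get(u, []), max_urls=2))
```

With `max_urls=2` the example prints `['https://example.com/', 'https://example.com/a']`. A real crawler would pass a function like `get_all_website_links` as `get_links`. Because the budget is checked before every recursive call, `max_urls` also bounds the recursion depth. This keeps large sites from hitting Python's recursion limit.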

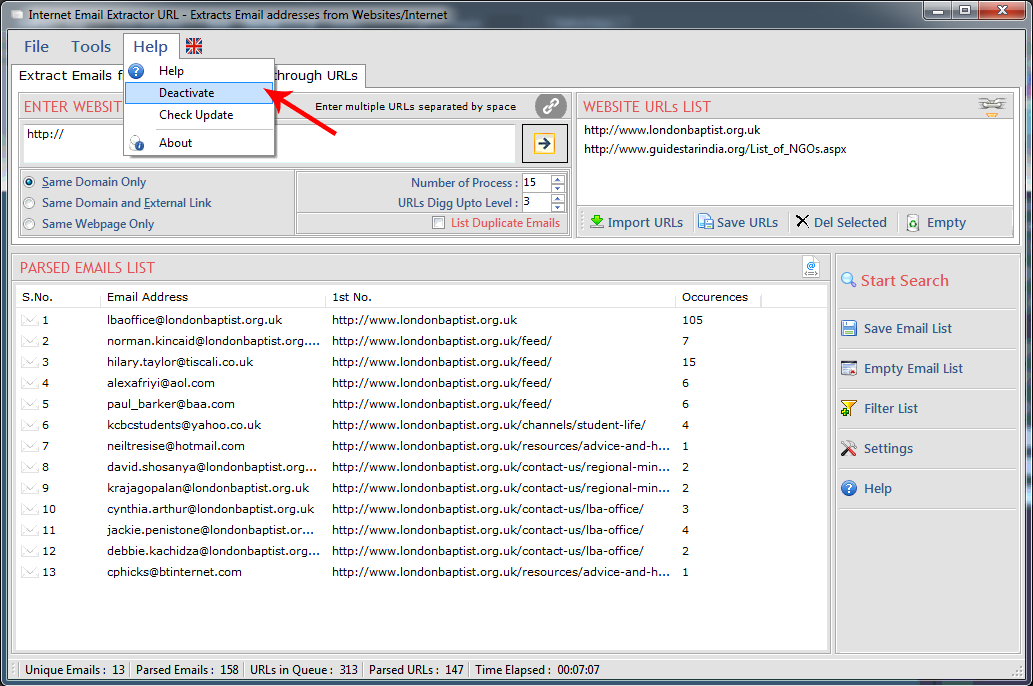

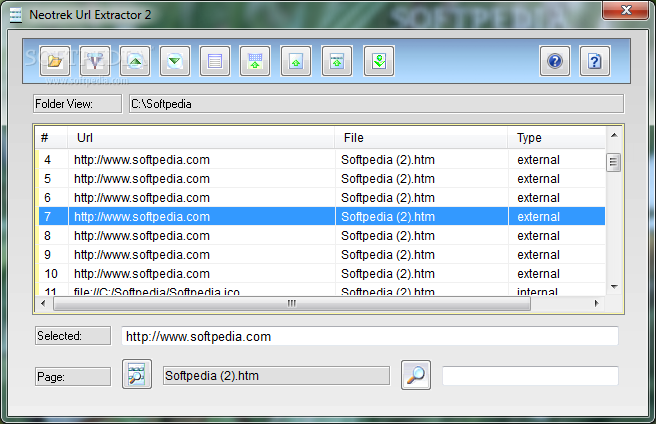

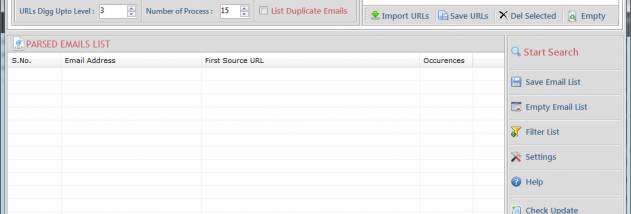

HTTP URL EXTRACTOR CODE

Third, I've downloaded the HTML content of the web page and wrapped it in a soup object to ease HTML parsing. Let's get all HTML `a` tags (anchor tags that contain all the links of the web page): `for a_tag in soup.find_all("a"):`. For each tag, we get the `href` attribute and check whether there is something there. If it is empty or missing, we just continue to the next link. Since not all links are absolute, we need to join relative URLs with the page URL (e.g. when `href` is "/search" and `url` is the site's home page, the result is the home page address followed by "/search"). `href = urljoin(url, href)` joins the URL if it's relative. Now we need to remove HTTP GET parameters from the URLs, since they would cause redundancy in the set. The first step is to parse the joined URL: `parsed_href = urlparse(href)`.
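The link-handling steps above can be sketched with the standard library alone: collect each `a` tag's `href`, skip empty values, join relative URLs, then drop GET parameters and fragments. Note the swap: this sketch uses Python's built-in `html.parser.HTMLParser` instead of BeautifulSoup so it runs without third-party packages. `AnchorCollector` and `extract_links` are hypothetical names. The sketch also skips links whose scheme is not http or https, an assumption beyond what the text describes. Without that check, rebuilding a `javascript:` or `mailto:` link as `scheme + "://" + netloc + path` would produce a bogus URL.

```python
from html.parser import HTMLParser
from urllib.parse import urljoin, urlparse


class AnchorCollector(HTMLParser):
    """Collects the href value of every <a> tag (None when the attribute is absent)."""

    def __init__(self):
        super().__init__()
        self.hrefs = []

    def handle_starttag(self, tag, attrs):
        # HTMLParser lowercases tag names; attrs is a list of (name, value) pairs
        if tag == "a":
            self.hrefs.append(dict(attrs).get("href"))


def extract_links(page_url, html):
    """Return absolute links from `html` with GET parameters and fragments removed."""
    collector = AnchorCollector()
    collector.feed(html)
    collector.close()
    links = []
    for href in collector.hrefs:
        if not href:
            # empty or missing href: continue to the next link
            continue
        # join the URL if it's relative (not an absolute link)
        href = urljoin(page_url, href)
        parsed_href = urlparse(href)
        # assumption: only web links are kept (skips javascript:, mailto:, ...)
        if parsed_href.scheme not in ("http", "https"):
            continue
        # keep scheme, domain and path only, dropping ?query and #fragment
        links.append(parsed_href.scheme + "://" + parsed_href.netloc + parsed_href.path)
    return links


if __name__ == "__main__":
    page = '<a href="/search?q=python#top">Search</a> <a href="">Empty</a> <a href="docs/intro">Docs</a>'
    print(extract_links("https://example.com/blog/", page))
```

Going back to BeautifulSoup only changes how the `href` values are collected (`a_tag.attrs.get("href")` for each tag). The joining and stripping steps stay the same.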

Not all links in anchor tags (`a` tags) are valid (I've experimented with this). Some link to parts of the website, and some are JavaScript. So let's write a function to validate URLs, `def is_valid(url):`, which ensures that a proper scheme (protocol, e.g. http or https) and domain name exist in the URL. Now let's build a function that returns all the valid URLs of a web page, `def get_all_website_links(url):`, which returns all URLs found on `url` that belong to the same website. It starts by getting the domain name of the URL without the protocol and parsing the page with `soup = BeautifulSoup(requests.get(url).content, "html.parser")`. First, I initialized the `urls` set variable. I've used Python sets here because we don't want redundant links. Second, I've extracted the domain name from the URL. We need it to check whether the link we grabbed is external or internal.
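Here is a minimal sketch of the URL check described above, using only `urllib.parse.urlparse`. It follows the text in requiring both a scheme and a domain name (`netloc`). Restricting the scheme to http or https is an extra assumption made here, so that `javascript:` and `mailto:` links are rejected too.

```python
from urllib.parse import urlparse


def is_valid(url):
    """
    Checks whether `url` has a proper scheme and a domain name.
    Assumption: only http and https schemes are accepted.
    """
    parsed = urlparse(url)
    return parsed.scheme in ("http", "https") and bool(parsed.netloc)


if __name__ == "__main__":
    for candidate in ["https://example.com/page", "/search", "#section", "javascript:void(0)"]:
        print(candidate, is_valid(candidate))
```

Dropping the scheme restriction and returning `bool(parsed.scheme) and bool(parsed.netloc)` gives the looser check the text describes.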

Let's import the modules we need: `requests`, `urlparse` and `urljoin` from `urllib.parse`, `BeautifulSoup` from `bs4`, and `colorama`. We are going to use colorama just to print in different colors, to distinguish between internal and external links, so we initialize the colorama module first. We need two global variables, one for all internal links of the website and the other for all the external links. Both are initialized as sets so that every link is stored only once. Internal links are URLs that link to other pages of the same website. External links are URLs that link to other websites.
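The split between internal and external links can be sketched with two global sets like the ones described above. This is an illustrative sketch, not the tutorial's code. `classify` is a hypothetical helper, and it treats a link as internal only when its domain (`netloc`) exactly matches the page's domain. Under that rule a subdomain such as `blog.example.com` counts as external. A looser substring test on the domain name would count subdomains as internal.

```python
from urllib.parse import urlparse

# initialize the set of links (unique links)
internal_urls = set()
external_urls = set()


def classify(page_url, links):
    """
    Sort absolute `links` found on `page_url` into the global sets.
    Returns the internal links that were not seen before, i.e. the ones
    a crawler would still need to visit.
    """
    domain_name = urlparse(page_url).netloc
    new_internal = set()
    for link in links:
        if urlparse(link).netloc == domain_name:
            if link not in internal_urls:
                internal_urls.add(link)
                new_internal.add(link)
        else:
            # sets ignore duplicates, so no membership check is needed here
            external_urls.add(link)
    return new_internal


if __name__ == "__main__":
    found = ["https://example.com/a", "https://other.org/b", "https://example.com/a"]
    print(classify("https://example.com/", found))
    print("Internal:", len(internal_urls), "External:", len(external_urls))
```

Returning only the newly seen internal links lets a crawler follow each page once, while the global sets keep the running totals printed at the end.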

We'll be using requests to make HTTP requests conveniently, BeautifulSoup for parsing HTML, and colorama for changing text color. Open up a new Python file and follow along.

HTTP URL EXTRACTOR INSTALL

Note that there are a lot of link extractors out there, such as Link Extractor by Sitechecker. The goal of this tutorial is to build one on your own using the Python programming language. Let's install the dependencies: `pip3 install requests bs4 colorama`

HTTP URL EXTRACTOR HOW TO

Disclosure: This post may contain affiliate links, meaning when you click the links and make a purchase, we receive a commission.

Extracting all links of a web page is a common task among web scrapers. It is useful for building advanced scrapers that crawl every page of a certain website to extract data. It can also be used for SEO diagnostics or even the information-gathering phase of penetration testing. In this tutorial, you will learn how to build a link extractor tool in Python from scratch using only the requests and BeautifulSoup libraries.
